Below, we list some of the most popular prompt editors in three general categories:

1. [Editors for improving productivity](#productivity-and-content-creation), for example, writers and marketers starting with a seed prompt and generating a more elaborate version.

2. [Prompt editors for converting natural language into creative assets](#creative-media-and-visual-design), or for refining such assets, like editing images using text prompts.

3. [Editors used when integrating prompts into production systems](#developing-llm-applications), for example, for building chatbots, copilots, and retrieval augmented generation (RAG) pipelines.

## Productivity and Content Creation

This category of prompt editors helps users write better prompts to get higher-quality but one-off outputs, with typical users being:

* Writers who want to overcome creative block, generate content ideas, and refine tone or phrasing for their work.

* Marketers who want to optimize prompts for ads, newsletters, SEO, and more.

### 1. Originality.ai: Best for Content Ideation

[Originality.ai](https://originality.ai/blog/ai-prompt-generator) offers a free prompt generation tool to help users create elaborate prompts for tasks like writing story elements, themes, and guidance.

These can jump-start the writing process and give users a starting point for generating varied written content quickly.

For example, you can ask it to produce a prompt for creating a marketing campaign slogan. After giving it some details, the tool outputs a prompt that’s actionable and well-structured, including a clear task description, details, output requirements, and sections for examples or response constraints.
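To make that structure concrete, here is a minimal sketch of a prompt organized into the sections described above. The `StructuredPrompt` class, its field names, and the sample slogan content are assumptions made for illustration; this is not Originality.ai's actual output format.

```python
from dataclasses import dataclass, field


@dataclass
class StructuredPrompt:
    """Hypothetical container mirroring the sections described above."""

    task: str
    details: list = field(default_factory=list)
    output_requirements: list = field(default_factory=list)
    examples: list = field(default_factory=list)
    constraints: list = field(default_factory=list)

    def render(self) -> str:
        """Join the non-empty sections into a single prompt string."""
        sections = [
            ("Task", [self.task]),
            ("Details", self.details),
            ("Output requirements", self.output_requirements),
            ("Examples", self.examples),
            ("Constraints", self.constraints),
        ]
        parts = []
        for title, items in sections:
            if not items:
                continue  # optional sections left empty are skipped
            body = "\n".join(f"- {item}" for item in items)
            parts.append(f"## {title}\n{body}")
        return "\n\n".join(parts)


# Illustrative content only; the product and audience are made up.
slogan_prompt = StructuredPrompt(
    task="Write a slogan for a marketing campaign.",
    details=["Product: reusable water bottle", "Audience: commuters"],
    output_requirements=["Return three options", "Under eight words each"],
    constraints=["Avoid superlatives"],
)
print(slogan_prompt.render())
```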

The tool also links to a number of other AI writing helpers, like pages for creating acronyms, rewording documents, and paraphrasing passages.

The prompt generator is free to use and separate from the rest of Originality.ai’s subscription.

### 2. PromptPerfect: Best for Improving Prompt Quality

[PromptPerfect](https://promptperfect.jina.ai/) optimizes users’ prompts by taking their initial prompts as input, along with the output quality they want, number of iterations for refinement, and other settings. It helps users arrive quickly at an optimized prompt and aims to reduce the usual trial-and-error associated with prompt engineering.

The tool offers third-party plugins for a number of commercially available text generation models, such as ChatGPT, Claude, and Llama, as well as text-to-image models like DALL·E, Midjourney, and Stable Diffusion.

PromptPerfect also allows users to compare the outputs of various models to help them decide on the best model.

The platform allows users to make up to 10 requests a day for free, then charges around $20 monthly for up to 500 requests per day.

### 3. PromptBase: A Marketplace for Tested Prompts

[PromptBase](https://promptbase.com/) aims to save users time on prompt design and iteration by providing a curated library of tens of thousands of prompts for ChatGPT, Midjourney, DALL·E, Stable Diffusion, and others.

It offers creators, marketers, and designers access to tested prompts for everything from SEO blog posts and ad copy to digital art and graphic design.

It also supports secure transactions, buyer protection, and clear licensing, so businesses can reliably integrate purchased prompts into enterprise environments.

Prompts are sold individually, typically ranging from $2 to $5 each, with no subscription required.

## Creative Media and Visual Design

These editors convert natural language into usable, editable creative assets, and are used by creators who care about style, aesthetics, and iteration speed.

Typical use cases include:

* Artists refining text-to-image prompts

* Designers iterating on variations of AI-generated visuals

* Creators producing social media content

### 4. Canva: Best for SMB Marketers and Enterprise Teams

[Canva’s AI Photo Editor](https://www.canva.com/features/ai-photo-editing/) lets users edit, enhance, and manipulate images with text prompts without needing advanced design skills.

Its editor uses AI to identify and separate photo elements, such as background, foreground, or individual objects, and allows targeted adjustments like brightness, contrast, or focus on specific parts.

It offers a number of other features, like the ability to erase backgrounds or generate entirely new backgrounds for images, enabling transparent overlays or new creative contexts without manual effort.

Canva’s basic features are free to use but you'll need a subscription to Canva Pro (around $120 per year) for more advanced features.

### 5. Phedra: A Tool for Solo Creators

[Phedra](https://phedra.ai/) is an image-editing platform that allows users to upload existing photos or graphics and then edit these via simple text or voice prompts in natural language. Rather than focusing on creating images from scratch, it enables AI-powered editing of user-provided images.

Users describe their desired changes in plain language, such as "Make the sky pink," "Remove the tree on the left," or "Add a vintage filter," and it understands commands typed or spoken in over 80 languages.

The software also allows removal of backgrounds, object manipulation like adding or removing elements, style transformations (e.g., pop art, oil painting), and 4K image upscaling for high-resolution output.

Phedra pricing starts at around $90 monthly on its annual plan, which also gets you the first three months free.

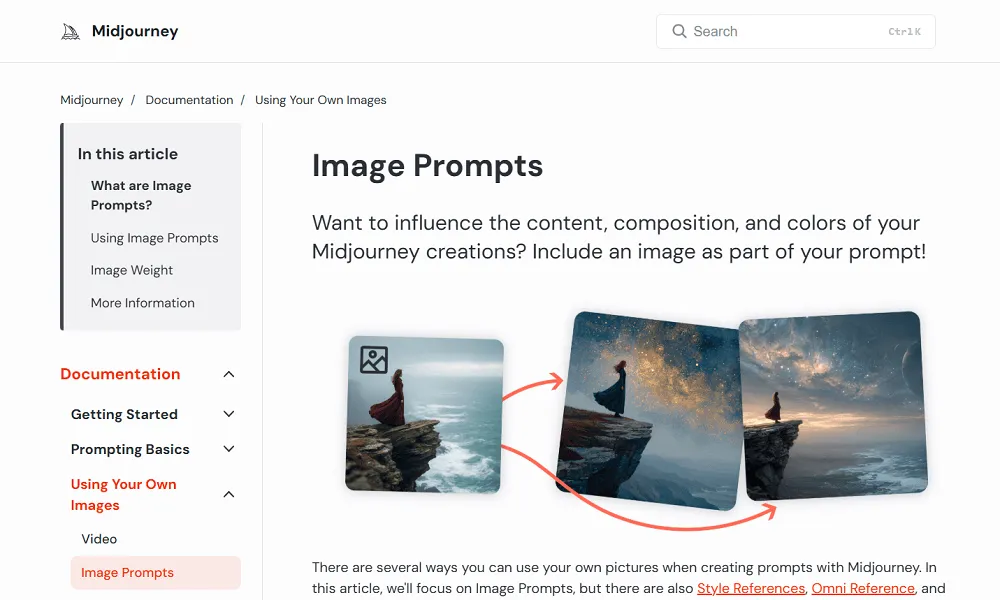

### 6. Midjourney: Best for Creative Agencies and Professional Artists

[Midjourney](https://www.midjourney.com/home) lets users upload images to Discord or another host and then edit them with text prompts that reference each image's URL. To work with multiple images in a single prompt, include several URLs separated by spaces. Midjourney then blends elements of each image, mixing styles, colors, or subjects from different sources.

Users issue prompts through Discord slash commands such as `/imagine` and `/blend`, and can add modifiers to adjust things like image weight, aspect ratio, and quality.

This allows them to set up workflows for rapid ideation and refinement, and provides prompts and seeds that can be reused for style consistency while generating batches and scaling asset production.
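As a rough illustration of how such a prompt is put together, the sketch below assembles the text that would follow `/imagine prompt:`. The helper `build_imagine_prompt` is hypothetical, and the example URLs are placeholders. The `--ar`, `--iw`, `--q`, and `--seed` flags are Midjourney parameters as we understand them, but accepted values vary by model version, so check Midjourney's documentation before relying on them.

```python
from typing import List, Optional


def build_imagine_prompt(
    text: str,
    image_urls: Optional[List[str]] = None,
    aspect_ratio: Optional[str] = None,
    image_weight: Optional[float] = None,
    quality: Optional[float] = None,
    seed: Optional[int] = None,
) -> str:
    """Assemble the text that follows `/imagine prompt:` (hypothetical helper)."""
    parts = list(image_urls or [])  # image URLs come first, separated by spaces
    parts.append(text)
    if aspect_ratio is not None:
        parts.append(f"--ar {aspect_ratio}")
    if image_weight is not None:
        parts.append(f"--iw {image_weight}")
    if quality is not None:
        parts.append(f"--q {quality}")
    if seed is not None:
        parts.append(f"--seed {seed}")  # reuse a seed to keep a style consistent
    return " ".join(parts)


prompt = build_imagine_prompt(
    "a lighthouse at dusk, watercolor",
    image_urls=["https://example.com/a.png", "https://example.com/b.png"],
    aspect_ratio="16:9",
    image_weight=1.5,
    seed=1234,
)
print(prompt)
```

Keeping the prompt text and seed in a function like this makes it easier to regenerate a batch later with only one value changed.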

Midjourney offers monthly subscriptions starting at $10 for a limited number of jobs, going up to $120 monthly for more GPU time.

## Developing LLM Applications

Users of this category of prompt editors generally treat prompts like software components to be versioned, logged, and tested, since even small wording changes can lead to different outputs.

Reproducibility of prompts is therefore key to debugging, evaluating, and ensuring predictable behavior across edge cases and user inputs.

This approach contrasts with the other two groups of editors above, where iteration is more about trial and error, and success is judged by whether the output finally feels “good enough.”

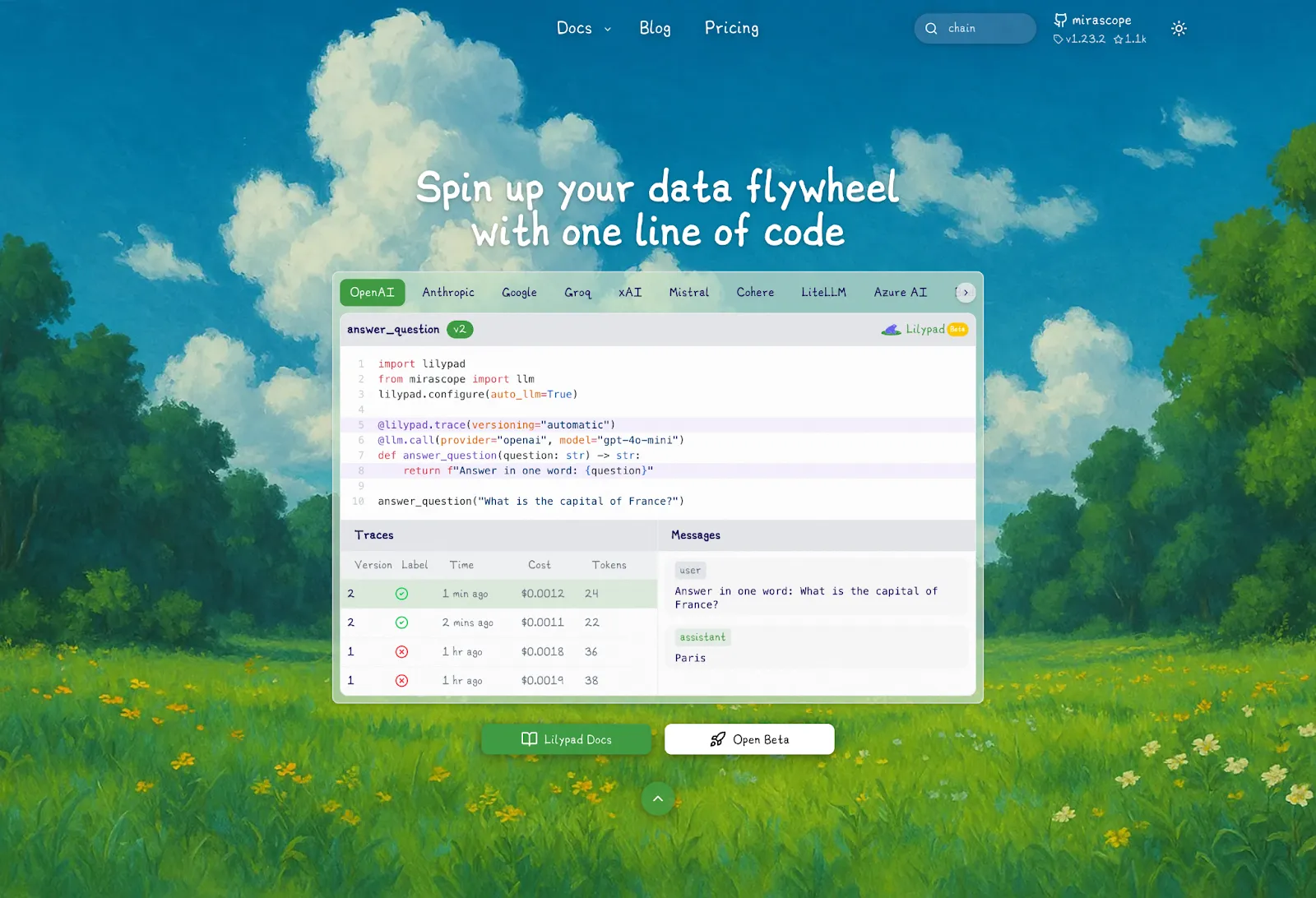

### 7. Lilypad: Best for Ensuring Prompt Reproducibility

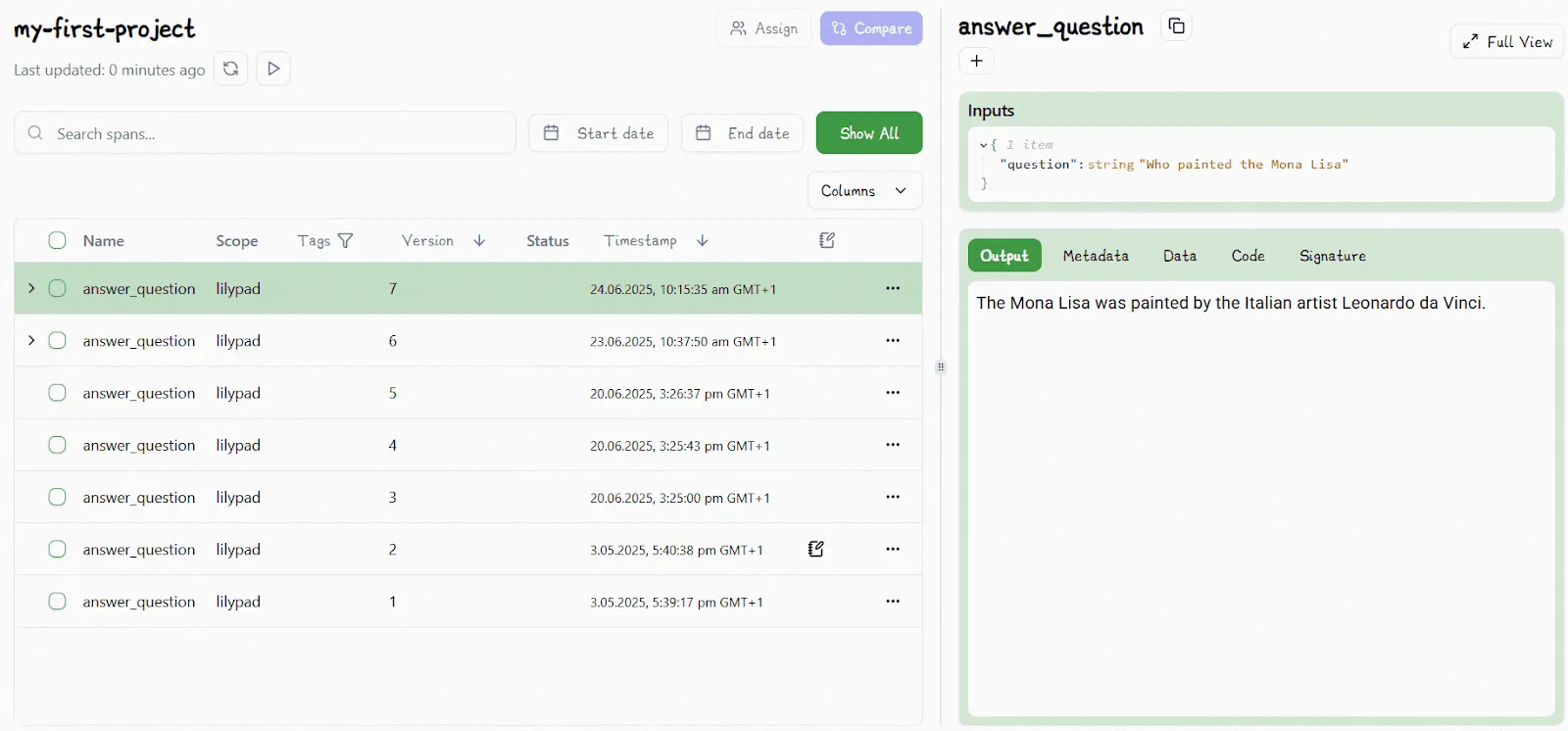

[Lilypad](/docs/lilypad) is an open source prompt engineering framework that lets users create, edit, and manage prompts through a playground interface that stays tightly synced with the application’s codebase. Unlike tools that store prompts separately, **Lilypad treats prompts as part of the code**, allowing it to version not just the prompt text, but the full context that shapes LLM behavior.

This includes model settings, conditional logic, input structure, and any other code that influences the output. As a result, Lilypad makes it easy to reproduce results, compare prompt versions, and improve performance systematically.
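The idea of versioning the full context, rather than the prompt text alone, can be sketched with a content hash. This is a simplified illustration and not Lilypad's actual mechanism: the `version_id` function, the `logic_source` parameter, and the model name `example-model` are all assumptions.

```python
import hashlib
import json


def version_id(template: str, settings: dict, logic_source: str = "") -> str:
    """Derive a short, deterministic ID from everything that shapes the output."""
    payload = json.dumps(
        {"template": template, "settings": settings, "logic": logic_source},
        sort_keys=True,  # key order must not change the resulting hash
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


# Same template and settings (in a different key order) give the same version.
v1 = version_id("Summarize: {text}", {"model": "example-model", "temperature": 0.2})
v2 = version_id("Summarize: {text}", {"temperature": 0.2, "model": "example-model"})

# Changing only the temperature yields a new version, even though the text is unchanged.
v3 = version_id("Summarize: {text}", {"model": "example-model", "temperature": 0.7})

print(v1 == v2, v1 == v3)
```

Because the ID changes whenever any input changes, two runs with the same ID can be assumed to share the same prompt, settings, and surrounding logic.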

It supports a wide range of LLM providers, including OpenAI, Google, Anthropic, Azure, AWS Bedrock, and more. Below, we explore the different ways users can work with prompts within Lilypad.

#### Ensuring Changes Are Predictable and Traceable

Lilypad approaches prompt engineering as a structured optimization process, not just creative trial-and-error. Like software development, it emphasizes discipline, reproducibility, and version control.

[Prompt optimization](/blog/prompt-optimization) involves not only looking at what the LLM said but also understanding why it responded that way. This shifts prompt editing from a purely creative task to a more systematic one, where every change should be tracked, tested, and refined.

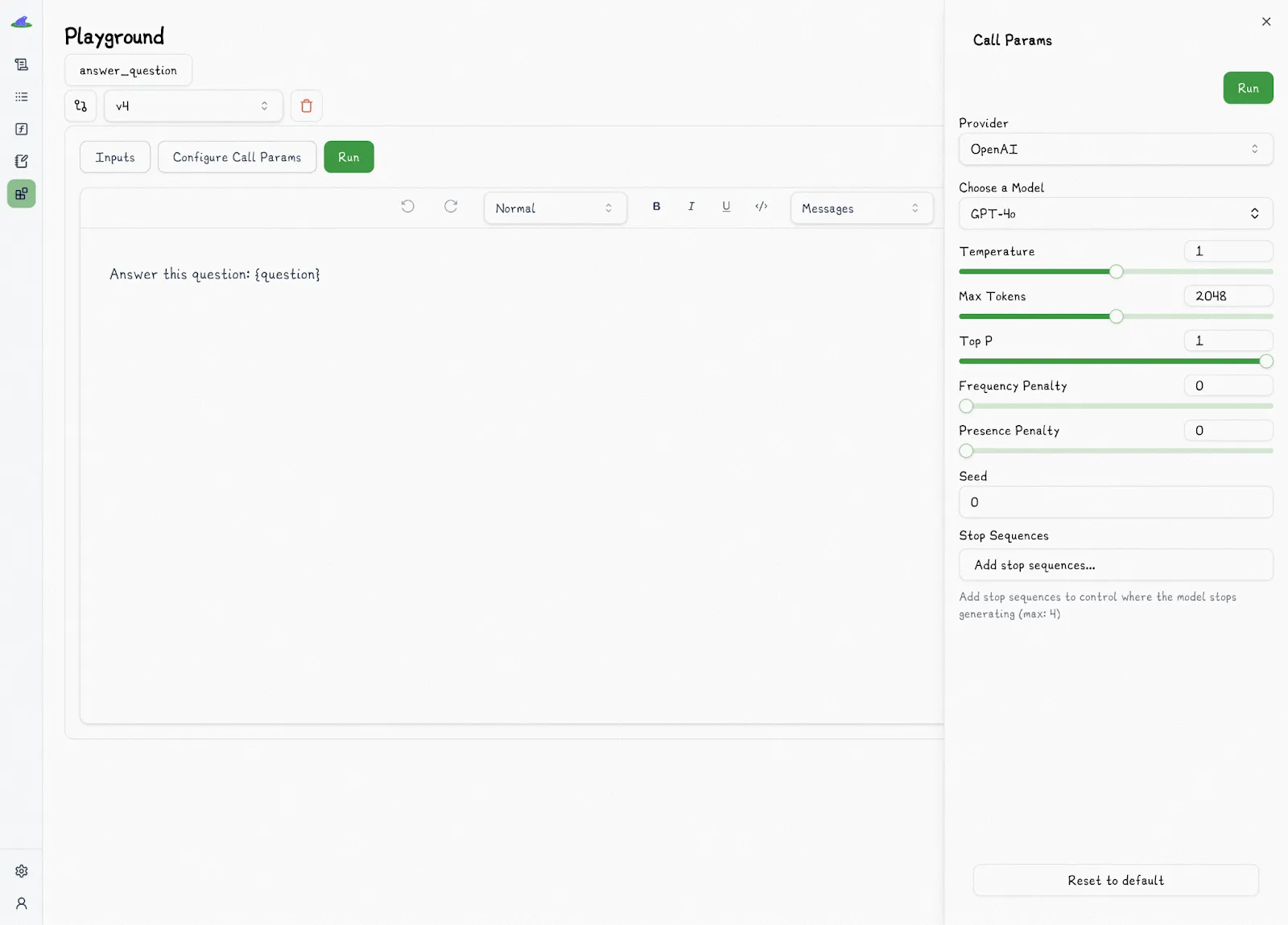

With Lilypad, prompts become part of an iterative feedback loop, powered by tools like annotations, comparisons, and A/B testing. To support this workflow, Lilypad offers a dedicated playground where users can write, test, and improve prompts, all while staying connected to the actual codebase.

The Lilypad playground supports markdown-based editing with typed variable placeholders, which helps prevent common errors like missing values, incorrect formats, or injection vulnerabilities.
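To show why typed placeholders catch mistakes early, here is a minimal sketch using only the standard library. It is not Lilypad's playground implementation; `render_prompt`, `TEMPLATE`, and `SCHEMA` are hypothetical names, and the sketch covers only missing values and wrong types.

```python
from string import Formatter

TEMPLATE = "Translate {text} into {language} using at most {max_words} words."
SCHEMA = {"text": str, "language": str, "max_words": int}


def render_prompt(template: str, schema: dict, values: dict) -> str:
    """Fill the template only if every placeholder has a value of its declared type."""
    # Collect the placeholder names that appear in the template.
    placeholders = {name for _, name, _, _ in Formatter().parse(template) if name}

    undeclared = placeholders - set(schema)
    if undeclared:
        raise ValueError(f"placeholders without a declared type: {sorted(undeclared)}")

    missing = placeholders - set(values)
    if missing:
        raise ValueError(f"missing values for: {sorted(missing)}")

    for name in sorted(placeholders):
        if not isinstance(values[name], schema[name]):
            raise TypeError(
                f"{name!r} expects {schema[name].__name__}, "
                f"got {type(values[name]).__name__}"
            )
    return template.format(**{name: values[name] for name in placeholders})


rendered = render_prompt(
    TEMPLATE, SCHEMA, {"text": "Good morning", "language": "French", "max_words": 5}
)
print(rendered)
```

Calling `render_prompt` without `language`, or with `max_words="five"`, raises an error before any request would reach a model.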

Users can also directly adjust model settings like temperature or `top_p`. Combined, these features ensure all prompt edits remain consistent, traceable, and fully integrated into the application’s codebase.

However, users of the prompt editor can modify only the prompt, not the underlying code.

Unlike other prompt editors, Lilypad automatically versions every change you make to a prompt without you having to hit “Save.”

This allows you to freely make changes and roll back to previous states as needed, ensuring full reproducibility even for small adjustments and allowing you to easily trace and understand every change and its impact on LLM behavior and outputs.

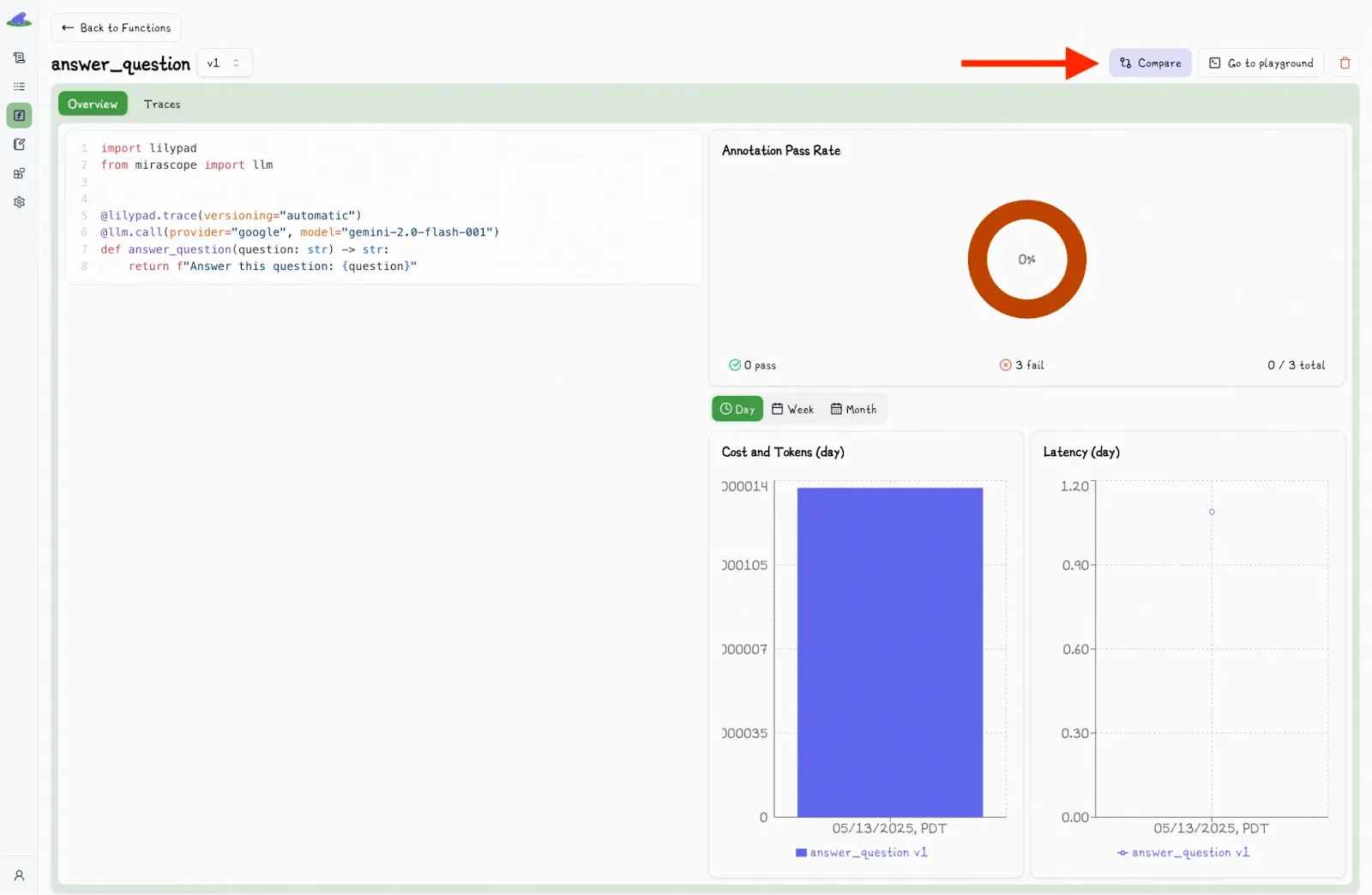

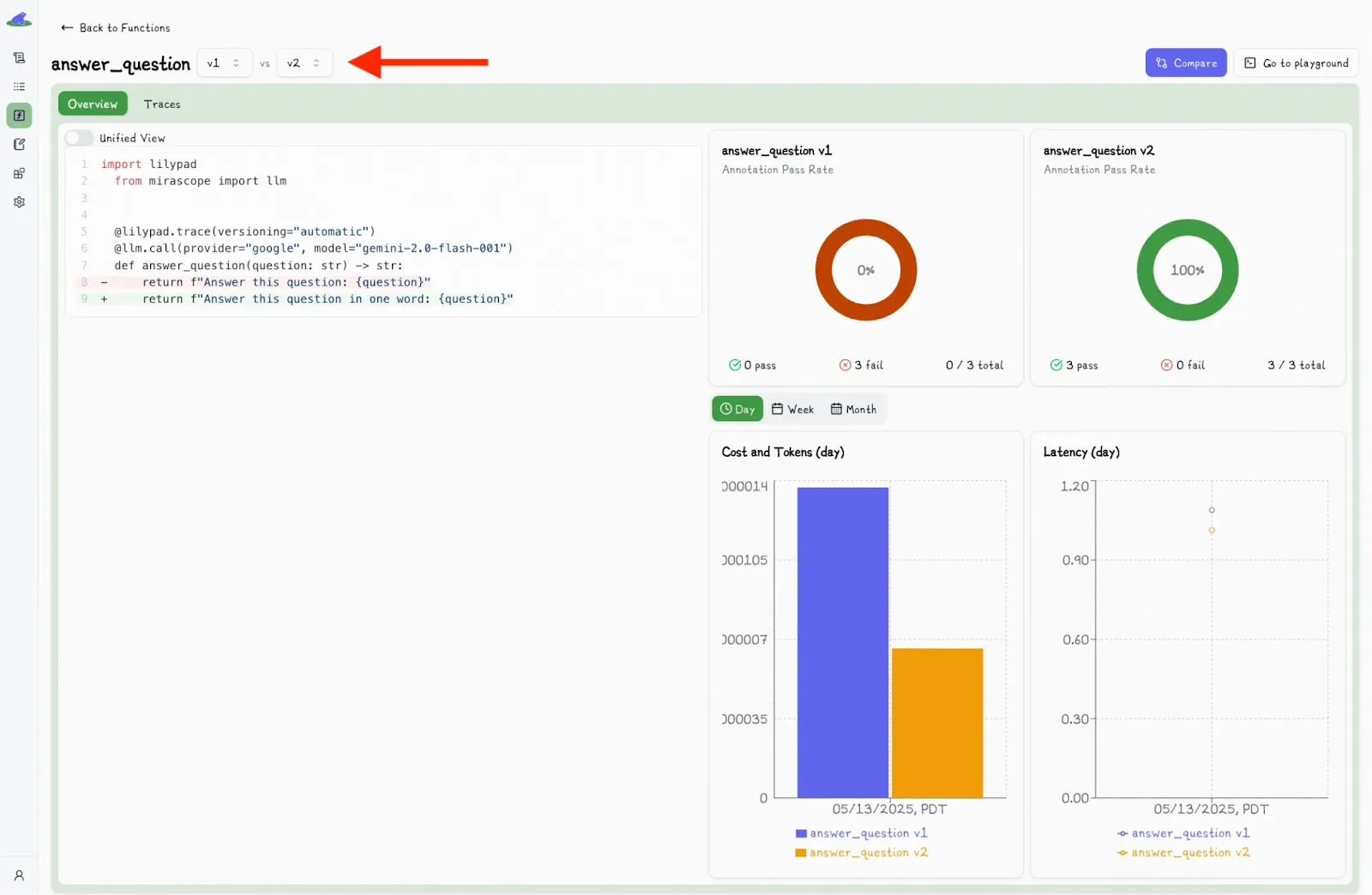

Lilypad’s [prompt management tool](/blog/prompt-management-tool) also allows you to compare different versions. Clicking the “Compare” button displays a second dropdown menu, where you can select another version and view the differences between the two side by side.
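Outside of a UI, the same kind of comparison can be approximated with Python's standard `difflib` module. The sketch below is only an illustration of diffing two prompt versions; the prompt text and the `prompt@v1` / `prompt@v2` labels are made up, and this is not how Lilypad renders its comparison view.

```python
import difflib

prompt_v1 = "You are a helpful assistant.\nAnswer in one sentence.\n"
prompt_v2 = (
    "You are a helpful assistant.\n"
    "Answer in two sentences.\n"
    "Cite your sources.\n"
)

# unified_diff yields header lines, then lines prefixed with ' ', '-', or '+'.
diff_lines = list(
    difflib.unified_diff(
        prompt_v1.splitlines(keepends=True),
        prompt_v2.splitlines(keepends=True),
        fromfile="prompt@v1",
        tofile="prompt@v2",
    )
)
print("".join(diff_lines))
```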

You can also view stats for prompts and LLM outputs, such as cost and latency.

#### Testing Prompts Before Deployment

A [prompt testing framework](/blog/prompt-testing-framework) ensures an AI’s output meets quality standards before it’s deployed to production, and helps catch issues in the prompt that could lead to inaccurate, irrelevant, or otherwise problematic responses. It also confirms that the prompt delivers the right results for your intended audience and use case.

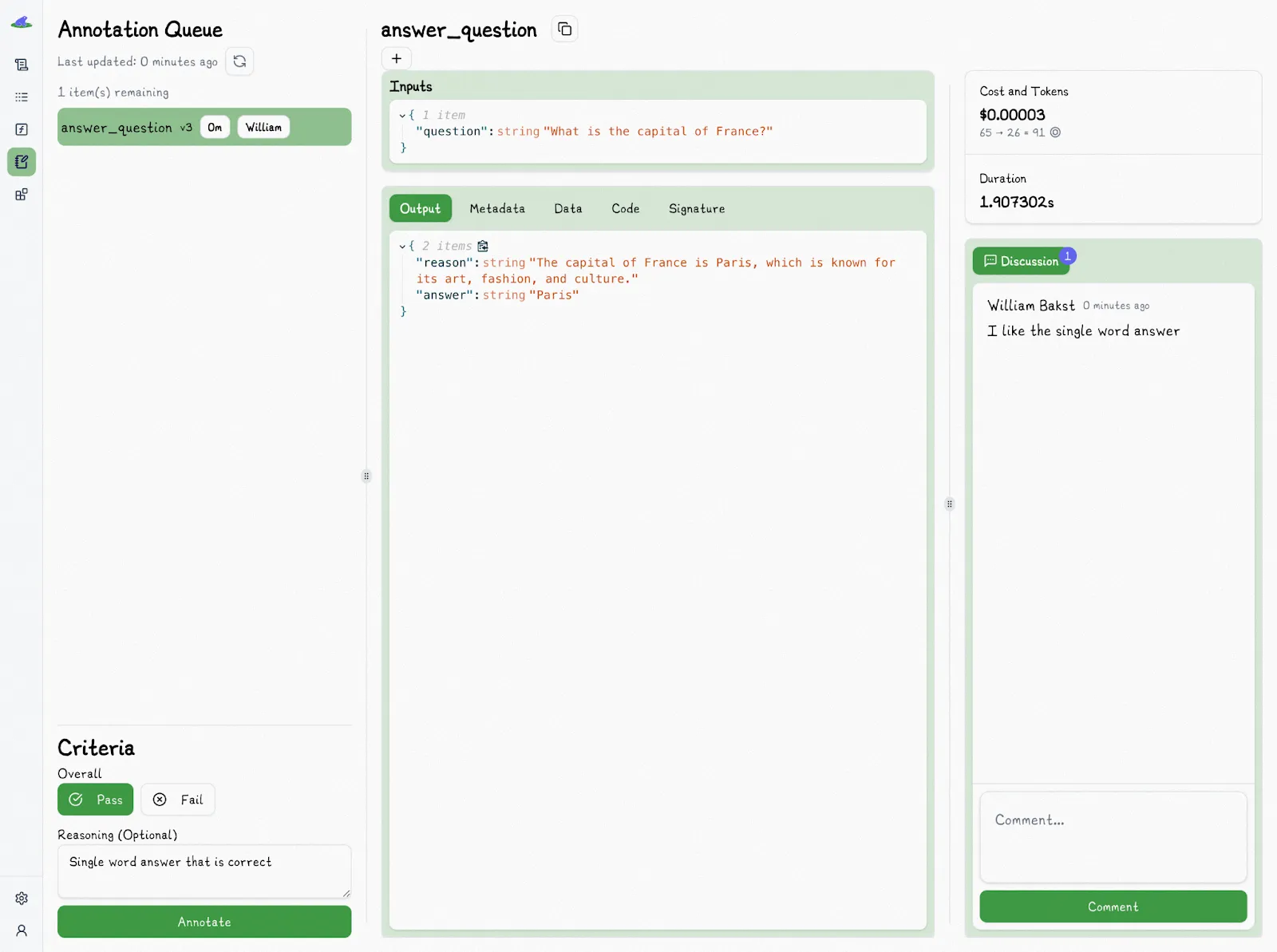

Lilypad allows users to test prompts and annotate LLM outputs accordingly, while comparing them alongside other versions.

Lilypad’s annotation system is built around a simple but important question: is the model’s output good enough for the given prompt? Reviewers label each output as either Pass or Fail, with the option to add reasoning.

This binary approach is often more practical than numeric rating systems (like a 1–5 scale), which can lead to inconsistent judgments. For example, one reviewer might rate a response a 4 and another a 5, even if they agree it’s acceptable. Pass/fail ratings reduce this ambiguity and make evaluations more actionable.

Annotations are managed through structured queues, where outputs can be reviewed and labeled. To minimize bias, existing labels and comments remain hidden until after a reviewer submits their own evaluation.

These annotations form high-quality datasets that can later support automated evaluation methods, such as using an [LLM-as-judge](/blog/llm-as-judge), ultimately reducing manual labeling and shifting human reviewers into a verification role.
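The review flow described above, with binary labels and existing labels hidden until a reviewer submits, might be modeled roughly as follows. The `Annotation` and `ReviewItem` classes and the reviewer names are hypothetical and do not reflect Lilypad's data model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Annotation:
    reviewer: str
    label: str  # either "pass" or "fail"
    reasoning: str = ""


class ReviewItem:
    """One LLM output waiting in a hypothetical annotation queue."""

    def __init__(self, output: str) -> None:
        self.output = output
        self._annotations: List[Annotation] = []

    def submit(self, reviewer: str, label: str, reasoning: str = "") -> None:
        """Record a binary judgment, with optional reasoning."""
        if label not in ("pass", "fail"):
            raise ValueError("label must be 'pass' or 'fail'")
        self._annotations.append(Annotation(reviewer, label, reasoning))

    def visible_annotations(self, reviewer: str) -> List[Annotation]:
        """Hide everyone's labels from a reviewer who has not yet submitted."""
        if any(a.reviewer == reviewer for a in self._annotations):
            return list(self._annotations)
        return []


item = ReviewItem("Paris is the capital of France.")
item.submit("ana", "pass")
before = item.visible_annotations("ben")  # ben has not submitted yet
item.submit("ben", "fail", "Missing the requested citation.")
after = item.visible_annotations("ben")
print(len(before), len(after))
```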

You can [sign up for Lilypad](https://lilypad.mirascope.com/) using your GitHub account and get started with tracing and versioning your LLM calls with only a few lines of code.

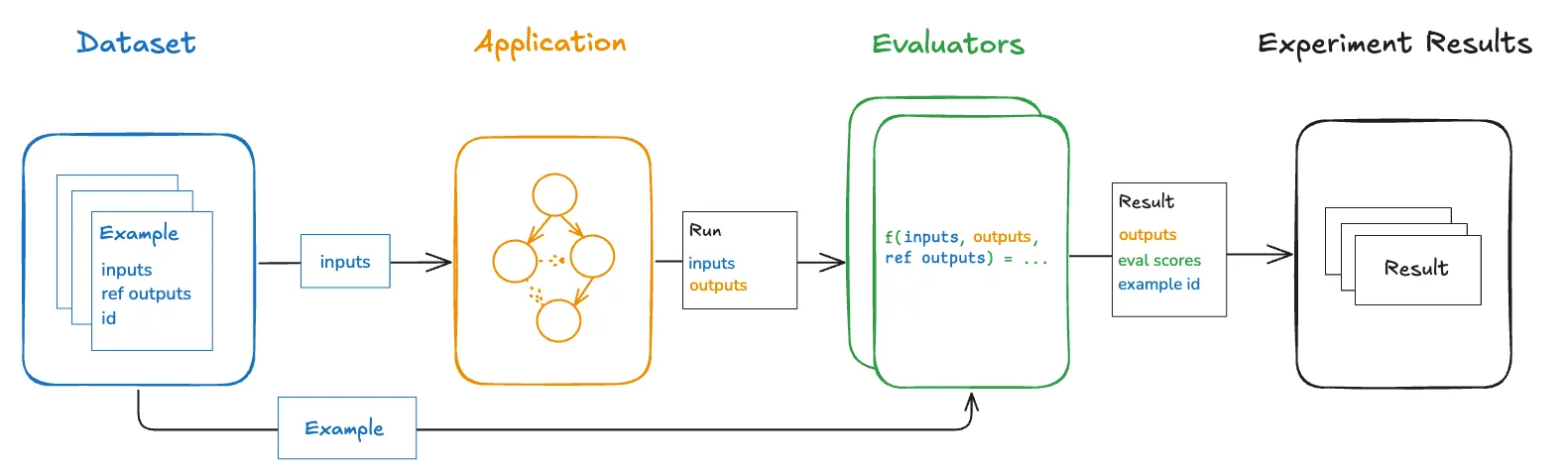

### 8. LangSmith: Best for Testing Prompts Against Datasets

[LangSmith](https://www.langchain.com/langsmith) is a closed source platform to create, edit, test, version, and manage prompts. It's a part of the LangChain ecosystem and works best with that framework.

The platform provides developers with a playground interface where they can edit prompt templates interactively, allowing them to modify prompt text, add or remove variables, modify prompt roles (e.g., system, human), and test outputs in the UI.

When using LangSmith, you must save prompts manually to create a new version, each of which gets a Git-like identifier. Once saved, versions support standard version control functionality like rolling back prompts and comparing them to earlier versions.

Also, LangSmith versions prompts separately from the code, which can lead to drift between what’s tested in the UI and what’s actually running in production if not carefully managed.

The platform lets users create test datasets against which they can run different prompts and view outputs under different model parameters. The results can be evaluated either automatically or via human review.
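The general pattern of running prompt variants against a dataset can be sketched in a few lines. This does not use the LangSmith SDK: `run_experiment`, `fake_model`, `exact_match`, and the tiny dataset are all stand-ins so the example runs offline.

```python
from typing import Callable, Dict, List


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call: echoes the last word, uppercased on request."""
    instruction, _, word = prompt.rpartition(": ")
    return word.upper() if "uppercase" in instruction else word


def exact_match(output: str, expected: str) -> bool:
    """Simplest possible automatic evaluator."""
    return output == expected


def run_experiment(
    templates: Dict[str, str],
    dataset: List[dict],
    model: Callable[[str], str],
    evaluator: Callable[[str, str], bool],
) -> Dict[str, float]:
    """Return the fraction of dataset rows each prompt variant gets right."""
    scores = {}
    for name, template in templates.items():
        passed = sum(
            evaluator(model(template.format(**row["inputs"])), row["expected"])
            for row in dataset
        )
        scores[name] = passed / len(dataset)
    return scores


dataset = [
    {"inputs": {"word": "paris"}, "expected": "PARIS"},
    {"inputs": {"word": "rome"}, "expected": "ROME"},
]
templates = {
    "v1": "Repeat the word: {word}",
    "v2": "Repeat the word in uppercase: {word}",
}
scores = run_experiment(templates, dataset, fake_model, exact_match)
print(scores)
```

Swapping `fake_model` for a real client call and `exact_match` for a stricter or model-based evaluator keeps the loop itself unchanged.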

LangSmith also supports a wide variety of model providers, like Google, OpenAI, Azure, Amazon Bedrock, etc.

*See our latest article on [LangSmith vs Langfuse](/blog/langsmith-vs-langfuse)*.

## Bring Reproducibility to Prompt Editing

Prompt editing shouldn’t be guesswork. With versioning, comparisons, and evaluation built in, you can trace every change, reproduce outputs, improve prompts systematically, and do [advanced prompt engineering](/blog/advanced-prompt-engineering). Treat your prompts with the same rigor as your code: reproducible, testable, and ready for production.

Want to learn more about Lilypad? Check out our code samples in our [documentation](/docs/lilypad) or on [GitHub](https://github.com/mirascope/lilypad). Lilypad offers first-class support for [Mirascope](https://github.com/mirascope/mirascope), our lightweight toolkit for building [LLM agents](/blog/llm-agents).

Prompt Editor: The Best AI Tools for Marketers, Creators, and Engineers

2025-09-27 · 6 min read · By William Bakst